Java IEEE 2012 Projects List:

- Cloud Data Production for Masses (Cloud Computing)

- Cooperative Provable Data Possession for Integrity Verification in Multi-Cloud Storage (Cloud & ParallelAnd Distributed)

- Ensuring Distributed Accountability for Data Sharing in the Cloud (Secure Computing)

- Game-Theoretic Pricing for Video Streaming in Mobile Networks (Image Processing)

- Learn to Personalized Image Search from the Photo Sharing Websites (Multimedia & ImageProcessing)

- On Optimizing Overlay Topologies for Search in Unstructured Peer-to-Peer Networks (Parallel AndDistributed)

- Online Modeling of Proactive Moderation System for Auction Fraud Detection (Network security)

- Packet-Hiding Methods for Preventing Selective Jamming Attacks (Secure Computing)

- Self Adaptive Contention Aware Routing Protocol for Intermittently Connected Mobile Networks (Parallel AndDistributed)

- Trust Modeling in Social Tagging of Multimedia Content (Image Processing)

- Efficient Fuzzy Type-Ahead Search in XML Data (Knowledge & DataEngineering)

- Fast Data Collection in Tree-Based Wireless Sensor Networks (Mobile Computing)

- Footprint Detecting Sybil Attacks in Urban Vehicular Networks (Parallel AndDistributed)

- Handwritten Chinese Text Recognition by Integrating Multiple Contexts (Pattern Analysis & Machine Intelligence)

- Multiparty Access Control For Online Social Networks: Model and Mechanisms (Knowledge & DataEngineering)

- Organizing User Search Histories (Knowledge & DataEngineering)

- Ranking Model Adaptation For Domain-Specific Search (Knowledge & DataEngineering)

- Risk Aware Mitigation (Secure Computing)

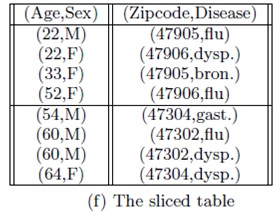

- Slicing: A New Approach to Privacy Preserving (Knowledge & DataEngineering)

- Secured Mobile Messaging